"Singular Value Perturbation and Deep Network Optimization", Rudolf H. Riedi, Randall Balestriero, and Richard G. Baraniuk, Constructive Approximation, 27 November 2022 (also arXiv preprint 2203.03099, 7 March 2022)

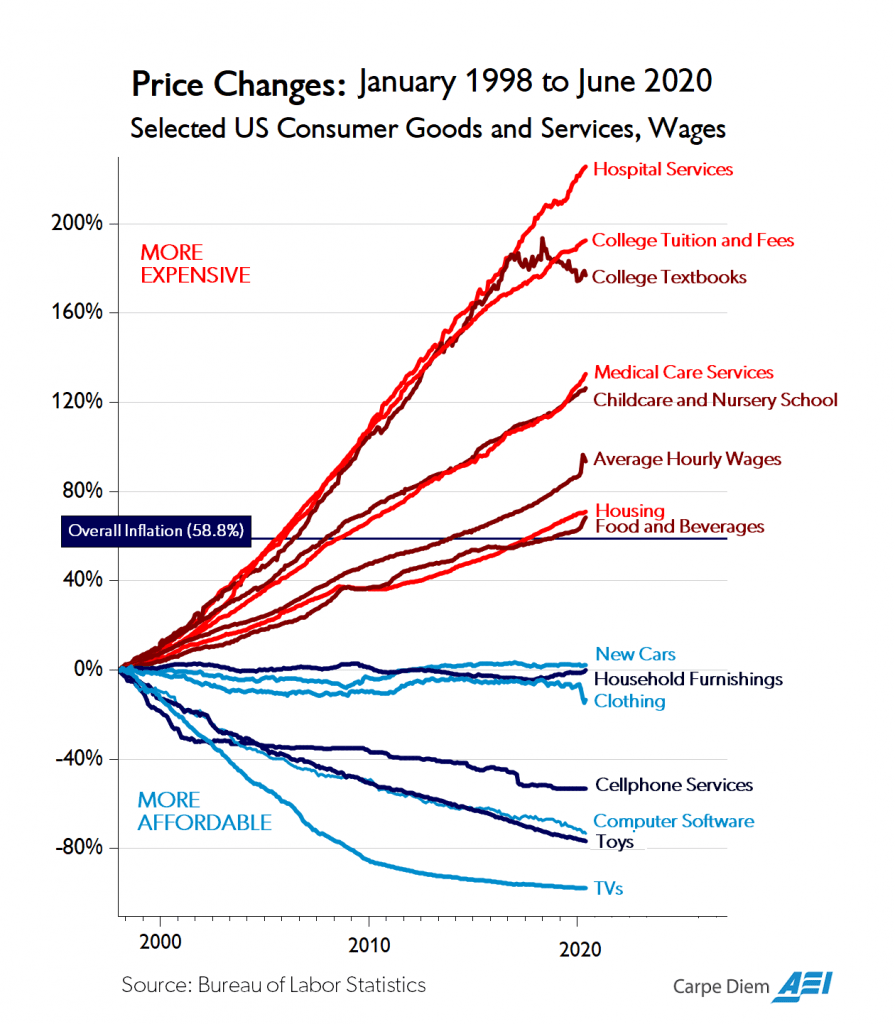

Deep learning practitioners know that ResNets and DenseNets are much preferred over ConvNets, because empirically their gradient descent learning converges faster and more stably to a better solution. In other words, it is not what a deep network can approximate that matters, but rather how it learns to approximate. Empirical studies have indicated that this is because the so-called loss landscape of the objective function navigated by gradient descent as it optimizes the deep network parameters is much smoother for ResNets and DenseNets as compared to ConvNets (see Figure 1 from Tom Goldstein's group above). However, to date there has been no analytical work in this direction.

Building on our earlier work connecting deep networks with continuous piecewise-affine splines, we develop an exact local linear representation of a deep network layer for a family of modern deep networks that includes ConvNets at one end of a spectrum and networks with skip connections, such as ResNets and DenseNets, at the other. For tasks that optimize the squared-error loss, we prove that the optimization loss surface of a modern deep network is piecewise quadratic in the parameters, with local shape governed by the singular values of a matrix that is a function of the local linear representation. We develop new perturbation results for how the singular values of matrices of this sort behave as we add a fraction of the identity and multiply by certain diagonal matrices. A direct application of our perturbation results explains analytically why a network with skip connections (e.g., ResNet or DenseNet) is easier to optimize than a ConvNet: thanks to its more stable singular values and smaller condition number, the local loss surface of a network with skip connections is less erratic, less eccentric, and features local minima that are more accommodating to gradient-based optimization. Our results also shed new light on the impact of different nonlinear activation functions on a deep network's singular values, regardless of its architecture.
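As a rough numerical illustration of the "add a fraction of the identity" idea, the sketch below compares the singular values of a random square matrix `W` with those of `I + W`. It is not the paper's construction: the matrix size, the random seed, the rescaling of `W` to spectral norm 0.5, and the helper name `condition_number` are all assumptions chosen for this example, and the activation-dependent diagonal matrices are left out. The only fact it relies on is Weyl's inequality for singular values, which places every singular value of `I + W` within `||W||_2` of 1.

```python
import numpy as np

def condition_number(a):
    """Ratio of largest to smallest singular value (hypothetical helper)."""
    s = np.linalg.svd(a, compute_uv=False)  # singular values, descending
    return s[0] / s[-1]

rng = np.random.default_rng(0)
n = 64  # assumed layer width, for illustration only

# Random square "layer" matrix, rescaled so its spectral norm is exactly 0.5.
g = rng.standard_normal((n, n))
w = 0.5 * g / np.linalg.norm(g, 2)

# Same matrix with an identity "skip" term added.
w_skip = np.eye(n) + w

cond_plain = condition_number(w)
cond_skip = condition_number(w_skip)

# Weyl's inequality: singular values of I + W lie in [1 - 0.5, 1 + 0.5],
# so the condition number of I + W is at most 1.5 / 0.5 = 3.
print(f"condition number without identity term: {cond_plain:.1f}")
print(f"condition number with identity term:    {cond_skip:.2f}")
```

In this toy setting the identity term keeps the singular values away from zero, which is one simple way to see why a smaller condition number, and hence a less eccentric local quadratic, can be expected when skip connections are present.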
