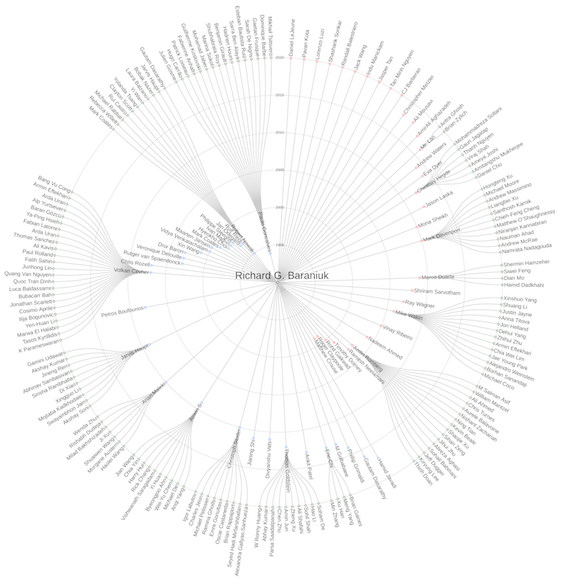

D. LeJeune, H. Javadi, R. G. Baraniuk, "The Implicit Regularization of Ordinary Least Squares Ensembles," arxiv.org/abs/1910.04743, 10 October 2019.

Ensemble methods that average over a collection of independent predictors that are each limited to a subsampling of both the examples and features of the training data command a significant presence in machine learning, such as the ever-popular random forest, yet the nature of the subsampling effect, particularly of the features, is not well understood. We study the case of an ensemble of linear predictors, where each individual predictor is fit using ordinary least squares on a random submatrix of the data matrix. We show that, under standard Gaussianity assumptions, when the number of features selected for each predictor is optimally tuned, the asymptotic risk of a large ensemble is equal to the asymptotic ridge regression risk, which is known to be optimal among linear predictors in this setting. In addition to eliciting this implicit regularization that results from subsampling, we also connect this ensemble to the dropout technique used in training deep (neural) networks, another strategy that has been shown to have a ridge-like regularizing effect.

Above: Example (rows) and feature (columns) subsampling of the training data X used in the ordinary least squares fit for one member of the ensemble. The i-th member of the ensemble is only allowed to predict using its subset of the features (green). It must learn its parameters by performing ordinary least squares using the subsampled examples of y (red) and the subsampled examples (rows) and features (columns) of X (blue, crosshatched).
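
The subsampling scheme described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code. The function names (`fit_ols_ensemble`, `fit_ridge`), the problem sizes, the subsample sizes, and the ridge penalty are all hypothetical choices made for this example. In particular, the number of features per member is not tuned here, so the two risks printed at the end need not coincide.

```python
import numpy as np


def fit_ols_ensemble(X, y, n_members, n_sub_examples, n_sub_features, rng):
    """Average the coefficients of OLS fits on random submatrices of X.

    Each member sees a random subset of rows (examples) and columns
    (features); its coefficients for unselected features are zero.
    """
    n, p = X.shape
    beta_sum = np.zeros(p)
    for _ in range(n_members):
        rows = rng.choice(n, size=n_sub_examples, replace=False)
        cols = rng.choice(p, size=n_sub_features, replace=False)
        # Ordinary least squares on the (rows, cols) submatrix of X.
        coef, *_ = np.linalg.lstsq(X[np.ix_(rows, cols)], y[rows], rcond=None)
        member = np.zeros(p)
        member[cols] = coef  # embed back into the full feature space
        beta_sum += member
    return beta_sum / n_members


def fit_ridge(X, y, lam):
    """Ridge regression: solve (X^T X + lam * I) beta = X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


# Simulated data with i.i.d. standard Gaussian features (assumed setup).
rng = np.random.default_rng(0)
n, p, sigma = 200, 100, 1.0
beta_true = rng.normal(size=p) / np.sqrt(p)
X = rng.normal(size=(n, p))
y = X @ beta_true + sigma * rng.normal(size=n)

beta_ens = fit_ols_ensemble(
    X, y, n_members=200, n_sub_examples=150, n_sub_features=40, rng=rng
)
# Penalty matched to the simulated prior variance 1/p and noise level sigma.
beta_ridge = fit_ridge(X, y, lam=sigma**2 * p)

# For isotropic features, the excess prediction risk equals the squared
# coefficient error ||beta_hat - beta_true||^2.
risk_ens = np.sum((beta_ens - beta_true) ** 2)
risk_ridge = np.sum((beta_ridge - beta_true) ** 2)
print(f"ensemble risk: {risk_ens:.3f}, ridge risk: {risk_ridge:.3f}")
```

Each member needs more subsampled examples than subsampled features for its least squares problem to be overdetermined, which is why this sketch uses 150 rows and 40 columns per member. Sweeping `n_sub_features` and comparing the ensemble risk against the ridge risk is one way to explore the correspondence the abstract describes.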